lakeFS Architecture

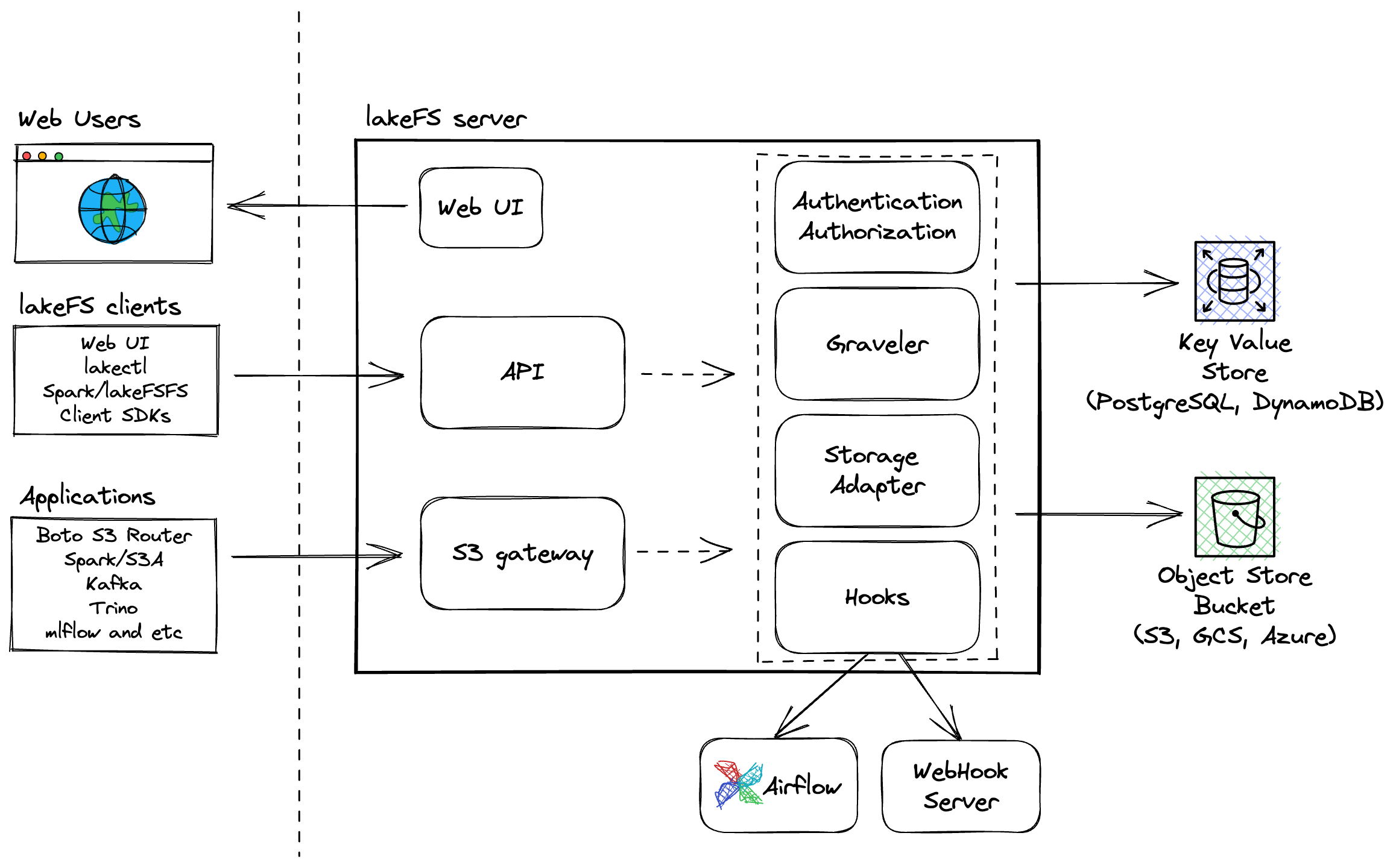

lakeFS is distributed as a single binary encapsulating several logical services.

The server itself is stateless: data lives in the object store and metadata in the metadata store, so you can scale horizontally by adding more instances to handle a bigger load.

Object Storage

lakeFS manages data stored on various object storage platforms, including:

- AWS S3

- Google Cloud Storage

- Azure Blob Storage

- MinIO

- NetApp StorageGRID

- Ceph

- Any other S3-compatible storage

lakeFS Enterprise adds support for multiple storage backends, letting you manage data across several storage locations, including on-prem, hybrid, and multi-cloud environments.

Metadata Storage

lakeFS uses an auxiliary key-value store for metadata. Supported databases include:

- PostgreSQL

- DynamoDB

- CosmosDB

- MemoryDB (or other Redis-compatible options) 🚀

See the installation guide and the configuration reference for how to set up lakeFS with any of the above options.
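Because lakeFS needs only key-value semantics from this store, one way to picture the abstraction is a small interface with partitioned keys and a conditional write. The sketch below is a hypothetical in-memory stand-in: `InMemoryKV`, `set_if`, and `PredicateFailed` are illustrative names, not the lakeFS `kv` package API. The compare-and-set shows the kind of guarantee a versioning system plausibly needs when two writers race to move a branch pointer.

```python
from typing import Dict, Iterator, Optional, Tuple


class PredicateFailed(Exception):
    """Raised when a conditional write finds an unexpected current value."""


class InMemoryKV:
    """Hypothetical in-memory metadata store (illustrative, not the lakeFS API).

    Keys are grouped by partition; values are opaque bytes.
    """

    def __init__(self) -> None:
        self._data: Dict[str, Dict[bytes, bytes]] = {}

    def get(self, partition: str, key: bytes) -> Optional[bytes]:
        return self._data.get(partition, {}).get(key)

    def set(self, partition: str, key: bytes, value: bytes) -> None:
        self._data.setdefault(partition, {})[key] = value

    def set_if(self, partition: str, key: bytes, value: bytes,
               expected: Optional[bytes]) -> None:
        # Compare-and-set: write only if the current value equals `expected`
        # (expected=None means "the key must not exist yet").
        if self.get(partition, key) != expected:
            raise PredicateFailed(key)
        self.set(partition, key, value)

    def delete(self, partition: str, key: bytes) -> None:
        self._data.get(partition, {}).pop(key, None)

    def scan(self, partition: str, start: bytes = b"") -> Iterator[Tuple[bytes, bytes]]:
        # Yield entries in key order, starting at `start` (inclusive).
        items = self._data.get(partition, {})
        for k in sorted(items):
            if k >= start:
                yield k, items[k]


# Usage: move a (hypothetical) branch pointer safely.
kv = InMemoryKV()
kv.set_if("repo1", b"branch/main", b"commit-a", expected=None)
kv.set_if("repo1", b"branch/main", b"commit-b", expected=b"commit-a")
try:
    # A writer holding a stale view (still expects commit-a) is rejected.
    kv.set_if("repo1", b"branch/main", b"commit-c", expected=b"commit-a")
    stale_write_rejected = False
except PredicateFailed:
    stale_write_rejected = True
```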

Learn More

For more on how lakeFS manages its versioning metadata, see Versioning internals and Internal database structure.

Load Balancing

lakeFS is accessed over HTTP. It exposes a frontend UI, an OpenAPI server, and an S3-compatible service (see S3 Gateway below). All three are served on a single port, so for most use cases a single load balancer in front of the lakeFS server(s) is enough.
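Since one port carries all three services, each request must be dispatched to the right one inside the server. The sketch below assumes a simple, hypothetical rule set (the `route` function and its rules are illustrative and not necessarily how lakeFS decides): paths under `/api/` go to the OpenAPI server, AWS-signed requests go to the S3 gateway, and everything else goes to the UI. Because the server is stateless, the load balancer in front of it does not need to care which instance receives a given request.

```python
from typing import Mapping


def route(path: str, headers: Mapping[str, str]) -> str:
    """Hypothetical dispatcher for requests arriving on the shared port.

    Illustrative rules only:
      * /api/... is handled by the OpenAPI server,
      * requests signed with AWS SIGv4 or SIGv2 go to the S3 gateway,
      * everything else is served by the UI.
    """
    if path.startswith("/api/"):
        return "openapi"
    auth = headers.get("Authorization", "")
    if auth.startswith("AWS4-HMAC-SHA256") or auth.startswith("AWS "):
        return "s3-gateway"
    return "ui"
```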

lakeFS Components

Internally, the single lakeFS binary is composed of a few key components:

S3 Gateway

The S3 Gateway is the layer in lakeFS responsible for S3 compatibility. It implements a compatible subset of the S3 API so that most data systems can use lakeFS as a drop-in replacement for S3.

See the S3 API Reference section for information on supported API operations.
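Through the gateway, a repository appears as a bucket and the ref (branch, tag, or commit ID) as the first component of the object key, so `s3://example-repo/main/data/file.parquet` addresses `data/file.parquet` on branch `main`. A minimal sketch of that mapping follows; `from_s3` and `LakeFSAddress` are hypothetical names, and real gateway requests also involve authentication and many S3 operations not shown here.

```python
from typing import NamedTuple


class LakeFSAddress(NamedTuple):
    """Hypothetical representation of a lakeFS address."""
    repository: str
    ref: str
    path: str


def from_s3(bucket: str, key: str) -> LakeFSAddress:
    """Map an S3-style (bucket, key) pair onto a lakeFS address.

    The bucket names the repository; the first key component names the
    ref, and the remainder is the object path (may be empty, e.g. when
    listing the root of a branch).
    """
    ref, sep, path = key.partition("/")
    if not ref or not sep:
        raise ValueError(f"key {key!r} must look like <ref>/<path>")
    return LakeFSAddress(bucket, ref, path)
```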

OpenAPI Server

The Swagger (OpenAPI) server exposes the full set of lakeFS operations (see Reference). This includes basic CRUD operations on repositories and objects, as well as versioning-related operations such as branching, merging, committing, and reverting changes to data.
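The API is plain HTTP, so any HTTP client can call it. The sketch below builds, without sending, a request that lists repositories using only the Python standard library. It assumes the API is mounted under `/api/v1` and that HTTP basic auth with a lakeFS access key pair is accepted; `list_repositories_request` is a hypothetical helper, and the endpoint and keys are placeholders.

```python
import base64
import urllib.request


def list_repositories_request(endpoint: str, access_key_id: str,
                              secret_access_key: str) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a 'list repositories' call.

    Assumes the OpenAPI routes live under /api/v1 and that basic auth with
    an access key ID and secret access key is accepted.
    """
    token = base64.b64encode(f"{access_key_id}:{secret_access_key}".encode()).decode()
    return urllib.request.Request(
        url=f"{endpoint.rstrip('/')}/api/v1/repositories",
        method="GET",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )


# To actually send it: urllib.request.urlopen(req) against a running server.
req = list_repositories_request("http://localhost:8000/", "key", "secret")
```

In practice a generated SDK wraps calls like this one, but seeing the raw request makes clear that the UI, SDKs, and lakectl all talk to the same HTTP API.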

Storage Adapter

The Storage Adapter is an abstraction layer for communicating with any underlying object store. Its implementations support many types of underlying storage, such as S3, GCS, and Azure Blob Storage, as well as non-production options such as the local storage adapter.

See the roadmap for future plans regarding storage compatibility.
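The adapter pattern can be sketched as an abstract interface with interchangeable backends. In the hypothetical sketch below, `StorageAdapter`, `MemoryAdapter`, and `copy_object` are illustrative names (not the lakeFS adapter interface): code above the adapter layer depends only on `put`/`get`, so swapping S3 for GCS or a test-only backend does not change it.

```python
from abc import ABC, abstractmethod
from typing import Dict


class StorageAdapter(ABC):
    """Hypothetical minimal interface that each object-store backend implements."""

    @abstractmethod
    def put(self, physical_address: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, physical_address: str) -> bytes: ...


class MemoryAdapter(StorageAdapter):
    """Non-production backend keeping objects in a dict (for tests/demos)."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, physical_address: str, data: bytes) -> None:
        self._objects[physical_address] = data

    def get(self, physical_address: str) -> bytes:
        try:
            return self._objects[physical_address]
        except KeyError:
            raise FileNotFoundError(physical_address) from None


def copy_object(src: StorageAdapter, dst: StorageAdapter, address: str) -> None:
    # Only the interface is used here, never a concrete backend type.
    dst.put(address, src.get(address))
```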

Graveler

Graveler handles lakeFS versioning by translating lakeFS addresses (repository, ref, and path) into the actual stored objects. To learn about the data model used to store lakeFS metadata, see the versioning internals page.
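A heavily simplified model of that translation: a branch points to a commit and carries uncommitted (staged) changes, and a read checks staging before falling back to the committed snapshot. The sketch below assumes this model; `Repository` and `resolve` are hypothetical, commits are flattened to plain dicts rather than the real range/metarange structures, and tags are not modeled.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Repository:
    """Hypothetical, flattened view of a repository's versioning metadata."""
    # commit ID -> {logical path -> physical address}
    commits: Dict[str, Dict[str, str]] = field(default_factory=dict)
    # branch name -> head commit ID
    branches: Dict[str, str] = field(default_factory=dict)
    # branch name -> staged changes; a None value marks a staged deletion
    staging: Dict[str, Dict[str, Optional[str]]] = field(default_factory=dict)


def resolve(repo: Repository, ref: str, path: str) -> Optional[str]:
    """Translate (ref, path) into a physical address, or None if absent."""
    if ref in repo.branches:
        staged = repo.staging.get(ref, {})
        if path in staged:
            return staged[path]  # staged write, or None for a staged delete
        commit_id = repo.branches[ref]
    else:
        commit_id = ref  # any other ref is treated as a commit ID here
    return repo.commits.get(commit_id, {}).get(path)


repo = Repository(
    commits={"c1": {"a.csv": "s3://bucket/data/abc123"}},
    branches={"main": "c1"},
    staging={"main": {"b.csv": "s3://bucket/data/def456", "a.csv": None}},
)
```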

Authentication & Authorization Service

The Auth service handles the creation, management, and validation of user credentials and RBAC policies.
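Policy evaluation can be sketched as matching an action and a resource against each statement's patterns. The sketch below assumes statements shaped like `{"effect", "action", "resource"}` with `*` wildcards and ARN-style resources (modeled on lakeFS policies such as `fs:ReadObject` on `arn:lakefs:fs:::repository/...`), and assumes an explicit deny overrides any allow with a default of deny. `is_allowed` and `Statement` are hypothetical names.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List, TypedDict


class Statement(TypedDict):
    """Hypothetical policy statement shape."""
    effect: str        # "allow" or "deny"
    action: List[str]  # e.g. ["fs:ReadObject"] or ["fs:Read*"]
    resource: str      # e.g. "arn:lakefs:fs:::repository/example-repo/object/*"


def is_allowed(statements: Iterable[Statement], action: str, resource: str) -> bool:
    """Assumed semantics: explicit deny wins, any matching allow grants, else deny."""
    allowed = False
    for st in statements:
        if not fnmatchcase(resource, st["resource"]):
            continue
        if not any(fnmatchcase(action, pattern) for pattern in st["action"]):
            continue
        if st["effect"] == "deny":
            return False
        if st["effect"] == "allow":
            allowed = True
    return allowed


policy: List[Statement] = [
    {"effect": "allow", "action": ["fs:Read*"],
     "resource": "arn:lakefs:fs:::repository/example-repo/object/*"},
    {"effect": "deny", "action": ["fs:ReadObject"],
     "resource": "arn:lakefs:fs:::repository/example-repo/object/secret/*"},
]
```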

The credential scheme, along with the request signing logic, is compatible with AWS IAM (both SIGv2 and SIGv4).

Currently, the Auth service manages its own database of users and credentials and doesn't use IAM in any way.
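SIGv4 compatibility means a client can sign requests with a lakeFS access key pair exactly as it would with AWS credentials. The core of SIGv4 is a chain of HMAC-SHA256 operations that derives a signing key scoped to a date, region, and service; the final signature is an HMAC of the request's "string to sign". The sketch below shows only that derivation (building the canonical request and string to sign is omitted); the function names are hypothetical.

```python
import hashlib
import hmac


def _hmac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sigv4_signing_key(secret_key: str, date: str, region: str, service: str) -> bytes:
    """Derive an AWS Signature Version 4 signing key.

    `date` is YYYYMMDD. Each HMAC step narrows the key's scope: date,
    then region, then service, then the fixed terminator "aws4_request".
    """
    k_date = _hmac(("AWS4" + secret_key).encode("utf-8"), date)
    k_region = _hmac(k_date, region)
    k_service = _hmac(k_region, service)
    return _hmac(k_service, "aws4_request")


def sigv4_signature(signing_key: bytes, string_to_sign: str) -> str:
    # The request signature is the hex-encoded HMAC of the string to sign.
    return hmac.new(signing_key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


key = sigv4_signing_key("wJalrXUtnFEMI/K7MDENG+bPxRfiCYEXAMPLEKEY", "20120215", "us-east-1", "s3")
```

Because the server holds the same secret, it can recompute the signature and compare, without the secret ever crossing the wire.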

Hooks Engine

The Hooks Engine enables CI/CD for data by triggering user-defined Actions that run on commit and merge events.
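The control flow of pre- and post-event hooks can be sketched as follows: a failing pre-commit hook blocks the commit, while post-commit hooks run after the commit exists and cannot undo it. This is a hypothetical model; `HooksEngine`, `register`, and the payload keys are illustrative, not the lakeFS Actions file format or webhook payload.

```python
from typing import Callable, Dict, List

# A hook receives an event payload and returns True (pass) or False (fail).
Hook = Callable[[dict], bool]


class HooksEngine:
    """Hypothetical sketch of running hooks around a commit."""

    def __init__(self) -> None:
        self._hooks: Dict[str, List[Hook]] = {}

    def register(self, event: str, hook: Hook) -> None:
        self._hooks.setdefault(event, []).append(hook)

    def _run(self, event: str, payload: dict) -> bool:
        # all() stops at the first failing hook.
        return all(hook(payload) for hook in self._hooks.get(event, []))

    def commit(self, branch: str, message: str, do_commit: Callable[[], str]) -> str:
        payload = {"event": "pre-commit", "branch": branch, "message": message}
        if not self._run("pre-commit", payload):
            raise RuntimeError(f"pre-commit hook failed on branch {branch!r}")
        commit_id = do_commit()
        self._run("post-commit", {**payload, "event": "post-commit", "commit_id": commit_id})
        return commit_id


engine = HooksEngine()
# Hypothetical pre-commit check: reject empty commit messages.
engine.register("pre-commit", lambda p: bool(p["message"].strip()))
committed: List[str] = []
engine.register("post-commit", lambda p: committed.append(p["commit_id"]) is None)

commit_id = engine.commit("main", "add sales data", lambda: "c42")
try:
    engine.commit("main", "   ", lambda: "c43")
    blocked = False
except RuntimeError:
    blocked = True
```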

UI

The UI layer is a simple browser-based client that uses the OpenAPI server. It lets users manage and explore repositories, branches, commits, and objects in the system, and access their data.

lakeFS Clients

Some data applications benefit from deeper integration with lakeFS, using lakeFS clients to support additional use cases or to gain enhanced functionality.

OpenAPI Generated SDKs

The OpenAPI specification can be used to generate lakeFS clients for many programming languages. For example, the Python lakefs-sdk and the Java client are published with every new lakeFS release.

lakectl

lakectl is a CLI tool that performs lakeFS operations through the lakeFS API from your preferred terminal.

lakeFS Mount (Everest)

Info

lakeFS Mount is available in lakeFS Cloud and lakeFS Enterprise.

lakeFS Mount allows users to virtually mount a remote lakeFS repository onto a local directory. Once mounted, users can access the data as if it resides on their local filesystem, using any tool, library, or framework that reads from a local filesystem.

Spark Metadata Client

The lakeFS Spark Metadata Client makes it easy to perform lakeFS metadata operations at scale, such as garbage collection or exporting data from lakeFS.
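Garbage collection is essentially mark-and-sweep over the commit graph: walk back from branch heads, keep commits that are heads or still within a retention window, and treat stored objects that no kept commit references as candidates for deletion. The sketch below is a simplified, hypothetical model (a single retention window, `find_garbage` as an illustrative name), not the Spark client's actual algorithm; a real collector must also protect uncommitted and in-flight objects, which this sketch would wrongly flag.

```python
from typing import Dict, List, Set


def find_garbage(
    parents: Dict[str, List[str]],    # commit ID -> parent commit IDs
    created_at: Dict[str, float],     # commit ID -> creation time (seconds)
    referenced: Dict[str, Set[str]],  # commit ID -> physical addresses it references
    heads: Set[str],                  # head commit of every branch
    now: float,
    retention_seconds: float,
    all_addresses: Set[str],          # every object in the storage namespace
) -> Set[str]:
    # Mark: walk the commit graph from the branch heads.
    keep: Set[str] = set()
    visited: Set[str] = set()
    stack = list(heads)
    while stack:
        commit = stack.pop()
        if commit in visited:
            continue
        visited.add(commit)
        if commit in heads or now - created_at[commit] <= retention_seconds:
            keep.add(commit)
        stack.extend(parents.get(commit, []))
    # Sweep: anything not referenced by a kept commit is a deletion candidate.
    live: Set[str] = set().union(*(referenced[c] for c in keep))
    return all_addresses - live


garbage = find_garbage(
    parents={"c3": ["c2"], "c2": ["c1"], "c1": []},
    created_at={"c1": 0.0, "c2": 50.0, "c3": 100.0},
    referenced={"c1": {"a", "b"}, "c2": {"b", "c"}, "c3": {"c", "d"}},
    heads={"c3"},
    now=100.0,
    retention_seconds=60.0,
    all_addresses={"a", "b", "c", "d", "e"},
)
```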

lakeFS Hadoop FileSystem

Thanks to the S3 Gateway, it's possible to interact with lakeFS using Hadoop's S3AFileSystem, but due to limitations of the S3 API, doing so requires reading and writing data objects through the lakeFS server. Using lakeFSFileSystem improves the performance of Spark ETL jobs by executing metadata operations on the lakeFS server and all data operations directly against the underlying object store that lakeFS uses.

How lakeFS Clients and Gateway Handle Metadata and Data Access

When you use any of the native integrations, such as the Python SDK, lakectl, Everest, or the lakeFS Spark client, these clients communicate with the lakeFS server to retrieve metadata.

For example, they may query lakeFS to find out which version of a file is needed or to track changes in branches and commits. This exchange carries only metadata about data locations and versions, not the data itself.

Once the client knows the exact data location from the lakeFS metadata, it directly accesses the data in the underlying object storage (potentially using presigned URLs) without routing through lakeFS.

Example

If data is stored in S3, the Spark client retrieves the S3 paths as presigned URLs from lakeFS, then reads from and/or writes to those URLs in S3 directly, without involving lakeFS in the data transfer.
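The split between a metadata round trip and a direct data read can be sketched with two toy components. Everything here is hypothetical: `MetadataServer`, `ObjectStore`, and `read_object` are illustrative names, and the HMAC-over-path-and-expiry scheme is a stand-in for real S3 presigned URLs (which use SigV4 query parameters). The point it shows is that the metadata server never touches object bytes; it only hands out a short-lived, signed location.

```python
import hashlib
import hmac
from typing import Dict, Tuple
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical secret shared by the metadata server and the toy object store.
STORE_SECRET = b"toy-shared-secret"


def _sign(path: str, expires: int) -> str:
    return hmac.new(STORE_SECRET, f"{path}:{expires}".encode(), hashlib.sha256).hexdigest()


class ObjectStore:
    """Toy object store that serves objects only for valid, unexpired signed URLs."""

    def __init__(self) -> None:
        self.objects: Dict[str, bytes] = {}

    def fetch(self, url: str, now: float) -> bytes:
        parsed = urlparse(url)
        query = parse_qs(parsed.query)
        expires = int(query["expires"][0])
        if not hmac.compare_digest(query["sig"][0], _sign(parsed.path, expires)) or now > expires:
            raise PermissionError(url)
        return self.objects[parsed.path]


class MetadataServer:
    """Toy stand-in for lakeFS: knows where data lives, never returns the data."""

    def __init__(self, index: Dict[Tuple[str, str, str], str]) -> None:
        self.index = index  # (repository, ref, path) -> physical path in the store

    def presign(self, repo: str, ref: str, path: str, now: float, ttl: int = 900) -> str:
        physical = self.index[(repo, ref, path)]
        expires = int(now) + ttl
        query = urlencode({"expires": expires, "sig": _sign(physical, expires)})
        return f"https://store.example.com{physical}?{query}"


def read_object(server: MetadataServer, store: ObjectStore,
                repo: str, ref: str, path: str, now: float) -> bytes:
    url = server.presign(repo, ref, path, now)  # 1. metadata request to lakeFS
    return store.fetch(url, now)                # 2. data read directly from storage


store = ObjectStore()
store.objects["/data/abc123"] = b"col1,col2\n"
server = MetadataServer({("example-repo", "main", "tables/t.csv"): "/data/abc123"})
```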